Optimization and Linux Version

I spent some time over December and January working on various things as the making itch struck, and I ended up being frustrated that I couldn't play my own game on the laptop I'd brought with me that runs linux. I spent a few evenings prodding at my messy jam code until I had something that runs smoothly on my low power linux laptop.

The first challenge was that I only had made a Windows build for the Jam. This was an easy fix, since the was built with the cross-platform Bevy Engine I was able to simply install the compiler and some prerequisites, pull my repo, and build. The game technically worked fine, unfortunately due to sloppy jam code it ran at an unplayable speed of about 10-15 frames per second even when built in release mode.

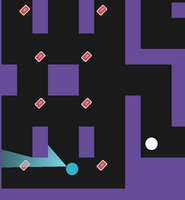

First step of optimizing is determining the slow code paths. In this case, I was able to quickly find that my custom lighting was the slowdown here by disabling systems in the engine until I saw improvements. While other systems were slow, the one for calculating the visibility polygons that are used to render the shadows was the slowdown here. Once slow path was identified there are a few standard debugging approaches to use.

The 'hammer' approach I used in the jam was just throwing more cores at the problem so that the excessive compute time can take less real time. During the 1 week jam this game was originally made in I had observed some performance issues, and because the designs of the Rust language and the Bevy engine made it easy, I just parallelized the light computations to quickly buy some frames. This worked, but as was observed by some players lead to extremely high CPU usage.

The two new approaches I adopted now that I had time to properly consider the problem were to

1) do less of the expensive thing

and

2) make the expensive thing cheaper

In the jam version, every enemy always computes its visibility polygon. I actually started this process by adding a little UI indicator to help me understand the problem better and see when my solution was working. I find little debug HUD elements like this to be massively helpful in seeing a problem like this and verifying the solution, particularly since if this worked there would be 0 visible on-screen difference to the game. Then I added a quick circle/rectangle intersection checker to use to see if each 'light source'/enemy was potentially visible on screen, and if it was I flagged it for needing an update. I chose this design because it could be easy to extend later. For example, I could update the circle/rectangle collision to consider the facing direction of the enemy since the spotlight only eminates from one side. I could also use my "needs update" flag in more ways, such as lights that never move in scenes without dynamic objects requiring shadows. Unfortunately, this game requires a lot of moving objects that cast shadows so this solution would end up being messier. Fortunately, neither of these extensions to the idea are needed since now we are down to 4 or fewer light updates in most of the game, which most systems should be able to comfortably handle with the existing parallelism and the other updates.

Now that the expensive task was being done closer to only when necessary, I still wasn't happy with the performance on my low-end laptop. It was now closer to a playable framerate, mostly (but not always) above the critical 30fps mark. The next step of optimization is when the algorithm nerd in me gets excited. Now it's time to put the thinking cap on and figure out if the way the light calculations are being done can be improved. The bulk of the lighting work is being done in an external crate called geo-visibility. I did some testing and found that the performance scales pretty steeply based on the number of objects in the scene (it looks like the curve is greater than O(N) but it's hard to be certain). I'm making the assumption that its at least O(N log N) because I think a sort is needed for this algorithm. With this in mind, the clear way to optimize this is to reduce the N of the algorithm, in this case by bringing down the number of objects in the scene that need to be lit.

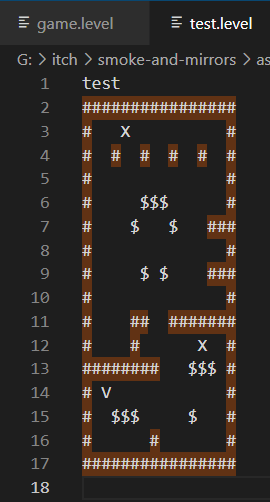

My first idea was to only send the subset of objects to each light that were within the range it should care about, but this quickly got messy and resulted in a lot of copies existing in memory and some nasty performance characteristics without any real gain. The next approach was to extend the level building tools I used so that levels could be pre-processed. My level 'editor' for this project was the old faithful notepad and a hacked-together bit of code to convert this kind of ascii into game objects. I built a custom bevy asset type for my level files and just started building it quickly as I only had a few hours left to work.

Each hashtag represents a wall block, an X is an enemy, a $ is a card, and the V is the player. This format is very fragile and if anything is wrong things break spectacularly, but these text files are in the game and it does just load a text file called "game.level" from the assets folder so if you want to poke around and break my code have fun. Also everything loads in upside down. Does this count as mod support?

The key optimization to be done here is "each hashtag represents a wall block". That is a huge number of wall blocks even on this very small level. If we know at some point the lighting calculation has to sort that, this small test level has 85 blocks and the main game world has 722. But this is clearly nonsense they are static rectangles. I wrote a level pre-processor that takes the 85 walls and combines and stretches them. Because of the design of the level being mostly thin walls, I only built it to combine along a single axis. Just this simple pre-processing on the level reduces the number of objects on the test level from 85 to 12. If the lighting is O(N^2) that means this could be theoretically 50x faster even on the small level.

While I could optimize further and allow blocks to stretch in multiple directions and cull lights more aggressively, with a couple of simple improvements that didn't change the fundamental code at all I was able to comfortably achieve my performance target of 60fps at native fullscreen on my laptop that wasn't even able to run this game before. I'm really happy with the results here and hope this walkthrough of my process and mistakes can help people write code that is less bad than my code.

And if you want to see the source and recoil in horror - https://github.com/jacobmcleman/rusty__jam

Leave a comment

Log in with itch.io to leave a comment.